What Is Low Latency and Who Needs It? (Update)

September 8, 2021

Here’s a dirty secret: When it comes to streaming media, it’s rare that “live” actually means live. Say you’re at home watching a live streamed concert, and you see an overly excited audience member jump onstage. Chances are, that event occurred at the concert venue 30 seconds prior to when you saw it on your screen.

Why? Because it takes time to pass chunks of data from one place to another. This delay between when a camera captures video and when the video is displayed on a viewer’s screen is called latency, and efforts to keep this delay in the sub-five-second range allow for low-latency streaming.

Table of Contents

- What Is Latency

- What Is Low Latency

- When Is Low Latency Important

- What Category of Latency Fits Your Scenario

- Who Needs Low Latency

- How Does Low-Latency Streaming Work

- Low-Latency Streaming Protocols

- Conclusion

What Is Latency?

Latency describes the delay between when a video is captured and when it’s displayed on a viewer’s device. Passing chunks of data from one place to another takes time, so latency builds up at every step of the streaming workflow. The term glass-to-glass latency is used to represent the total time difference between source and viewer. Other terms, like ‘capture latency’ or ‘player latency,’ only account for lag introduced at a specific step of the streaming workflow.

What Is Low Latency?

So, if several seconds of latency is normal, what is considered low latency? When it comes to streaming, low latency describes a glass-to-glass delay of five seconds or less.

That said, it’s a subjective term. The popular Apple HLS streaming protocol defaults to approximately 30 seconds of latency (more on this below), while traditional cable and satellite broadcasts are viewed with about a five-second delay behind the live event.

However, some people need even faster delivery. For this reason, separate categories like ultra low latency and near real-time have emerged, coming in at under one second. This group of streaming applications is usually reserved for interactive use cases, two-way chat, and real-time device control (think live streaming from a drone).
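As a rough illustration of the thresholds above, a minimal Python sketch might label a measured glass-to-glass delay. The function name and the category labels are hypothetical; only the one-second and five-second cutoffs come from the text.

```python
def classify_latency(glass_to_glass_seconds: float) -> str:
    """Label a glass-to-glass delay using the cutoffs described above."""
    if glass_to_glass_seconds < 0:
        raise ValueError("latency cannot be negative")
    if glass_to_glass_seconds < 1.0:
        # Under one second: ultra low latency / near real-time.
        return "near real-time"
    if glass_to_glass_seconds <= 5.0:
        # Five seconds or less: low latency.
        return "low latency"
    # Anything slower falls outside the low-latency range
    # (the label below is an illustrative assumption).
    return "standard latency"


if __name__ == "__main__":
    for delay in (0.5, 4.0, 30.0):
        print(f"{delay} s -> {classify_latency(delay)}")
```

Where exactly the boundaries sit is a judgment call, since the term itself is subjective, so treat the cutoffs as adjustable defaults rather than fixed rules.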

To explore current trends and technologies around low-latency streaming, Wowza’s Justin Miller, video producer, sits down with Barry Owen, vice president of solutions services, in the video below.

When Is Low Latency Important?

No one wants notably high latency, of course — but in what contexts does low latency truly matter?

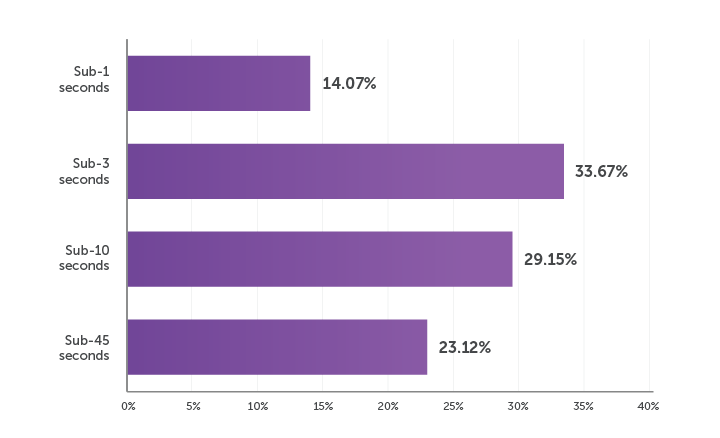

Oftentimes, a slight delay isn’t problematic. In fact, the majority of broadcasters surveyed for our 2021 Video Streaming Latency Report (53%) indicated that they were experiencing latency in the 3-45 second range.

How much latency are you currently experiencing?

Returning to our concert example, it’s often irrelevant if 30 seconds pass before viewers find out that the lead guitarist broke a string. And attempting to deliver the live stream quicker can introduce additional complexity, costs, obstacles with scaling, and opportunities for failure.

That said, for some streaming use cases — especially those requiring interactivity — low latency is a business-critical consideration.

What Category of Latency Fits Your Scenario?

You’ll want to differentiate between the multiple categories of latency and determine which is best suited for your streaming scenario. These categories include:

- Near real time for video conferencing and remote devices

- Low latency for interactive content

- Reduced latency for live premium content

- Typical HTTP latencies for linear programming and one-way streams

You’ll notice in the chart below that the more passive the broadcast, the higher it’s acceptable for latency to climb.

You’ll also notice that the two most common HTTP-based protocols (HLS and MPEG-DASH) are at the high end of the spectrum. So why are the most popular streaming protocols also sloth-like when it comes to measurements of glass-to-glass latency?

Due to their ability to scale and adapt, HTTP-based protocols ensure reliable, high-quality experiences across all screens. This is achieved with technologies like buffering and adaptive bitrate streaming — both of which improve the viewing experience by increasing latency.

Balancing Latency With Quality

High resolution is great. But what about scenarios where a high-quality viewing experience requires lightning-fast delivery? Say you’re streaming a used car auction. Timely bidding will take precedence over picture quality any day of the week.

For some use cases, getting the video where it needs to go quickly is more important than 4K resolution. More examples to follow.

Who Needs Low Latency?

Let’s take a look at a few streaming use cases where minimizing video lag is undeniably important.

Second-Screen Experiences

If you’re watching a live event on a second-screen app (such as a sports league or official network app), you’re likely running several seconds behind live TV. While there’s inherent latency for the television broadcast, your second-screen app needs to at least match that same level of latency to deliver a consistent viewing experience.

For example, if you’re watching your alma mater play in a rivalry game, you don’t want your experience spoiled by comments, notifications or even the neighbors next door celebrating the game-winning score before you see it. This results in unhappy fans and dissatisfied (often paying) customers.

Today’s broadcasters are also integrating interactive video chat with large-scale broadcasts for things like watch parties, virtual breakout rooms, and more. These multimedia scenarios require low-latency video delivery to ensure a synced experience free of any spoilers.

Video Chat

This is where ultra low latency “real-time” streaming comes into play. We’ve all seen televised interviews where the reporter is speaking to someone at a remote location, and the latency in their exchange results in long pauses or the two parties talking over each other. That’s because the latency goes both ways. Maybe it takes a full second for the reporter’s question to make it to the interviewee, but then it takes another second for the interviewee’s reply to get back to the reporter. These conversations can turn painful quickly.

When true immediacy matters, about 500 milliseconds of latency in each direction is the upper limit. That’s short enough to allow for smooth conversation without awkward pauses.
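The arithmetic behind that limit is simple: in a two-way exchange, the delay in each direction adds up before a reply is heard. The sketch below is only an illustration, with hypothetical function names and the 500-millisecond figure taken from the paragraph above.

```python
def round_trip_delay_ms(outbound_ms: float, inbound_ms: float) -> float:
    """Total wait between asking a question and hearing the reply begin."""
    return outbound_ms + inbound_ms


def feels_conversational(one_way_ms: float, limit_ms: float = 500.0) -> bool:
    """True when the one-way delay stays within the stated upper limit."""
    return one_way_ms <= limit_ms


if __name__ == "__main__":
    # The televised interview example: one second in each direction.
    print(round_trip_delay_ms(1000, 1000))  # 2000
    print(feels_conversational(1000))       # False
    print(feels_conversational(400))        # True
```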

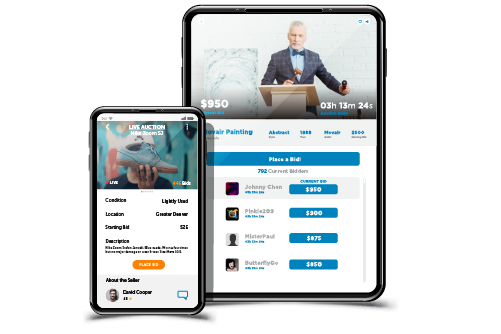

Betting and Bidding

Activities such as auctions and sports-track betting are exciting because of their fast pace. And that speed calls for real-time streaming with two-way communication.

For instance, horse-racing tracks have traditionally piped in satellite feeds from other tracks around the world and allowed their patrons to bet on them online. Ultra low latency streaming eliminates problematic delays, ensuring that everyone has the same opportunity to place their bets in a time-synchronized experience. Similarly, online auctions and trading platforms are big business, and any delay can mean bids or trades aren’t recorded properly. Fractions of a second can mean billions of dollars.

Video Game Streaming and Esports

Anyone who’s yelled “this game cheats!” (or more colorful invectives) at a screen knows that timing is critical for gamers. Sub-100-millisecond latency is a must. No one wants to play a game via a streaming service and discover that they’re firing at enemies who are no longer there. In platforms offering features for direct viewer-to-broadcaster interaction, it’s also important that viewer suggestions and comments reach the streamer in time for them to beat the level.

Remote Operations

Any workflow that enables a physically distant operator to control machines using video requires real-time delivery. Examples range from video-enabled drill presses and endoscopy cameras to smart manufacturing and digital supply chains.

For these scenarios, any delay north of one second could be disastrous. Maintaining a continuous feedback loop between the device and operator is also key, which often requires advanced architectures leveraging timed metadata.

Real-Time Monitoring

Coastguards use drones for search-and-rescue missions, doctors use IoT devices for patient monitoring, and military-grade bodycams help connect frontline responders with their commander. Any lag could mean the difference between life and death for these applications, making low latency critical.

Real-time monitoring is also well entrenched in the consumer world, powering everything from pet and baby monitors to wearable devices. Whether communicating with the delivery person via a doorbell cam or monitoring the respiratory rate of a newborn, many of these video-enabled devices let viewers play an active role.

Interactive Streaming and User-Generated Content

From digital interactive fitness to social media sites like TikTok, interactive streaming is becoming the norm. This combination of internet-based video delivery and two-way data exchange boosts audience engagement by empowering viewers to influence live content via participation.

For example, Peloton incorporates health, video, and audio feedback into an ongoing experience that keeps their users hooked. Likewise, e-commerce websites like Taobao power influencer streaming in the retail industry. End-users become contributing members in these types of broadcasts, making speedy delivery essential to community participation.

How Does Low-Latency Streaming Work?

Now that you know what low latency is and when it’s important, you’re probably wondering, how can I deliver lightning-fast streams?

As with most things in life, low-latency streaming involves tradeoffs. You’ll have to balance three factors to find the mix that’s right for you:

- Encoding efficiency and device/player compatibility.

- Audience size and geographic distribution.

- Video resolution and complexity.

The streaming protocol you choose makes a big difference, so let’s dig into that.

Apple HLS is the most widely used protocol for stream delivery due to its reliability, but it wasn’t originally designed for true low-latency streaming. As an HTTP-based protocol, HLS streams chunks of data, and video players need a certain number of chunks (typically three) before they start playing. If you’re using the default chunk size for traditional HLS (6 seconds), that means you’re already about 18 seconds behind live before any other delay is counted. Tuning can cut this down, but the smaller you make those chunks, the more buffering your viewers will experience.
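To see how chunk size drives this delay, here is a minimal sketch of the player-buffer arithmetic. It assumes the player waits for a fixed number of complete chunks before starting, and it ignores encoding, packaging, and network time, so the result is a lower bound rather than a measured latency. The function name is hypothetical.

```python
def player_buffer_delay(chunk_seconds: float, chunks_buffered: int = 3) -> float:
    """Delay added by a player that buffers whole chunks before playback.

    Assumes playback starts only after `chunks_buffered` complete chunks
    have arrived (three is the typical figure cited above).
    """
    if chunk_seconds <= 0 or chunks_buffered <= 0:
        raise ValueError("chunk size and chunk count must be positive")
    return chunk_seconds * chunks_buffered


if __name__ == "__main__":
    for chunk in (6.0, 2.0, 1.0):
        delay = player_buffer_delay(chunk)
        print(f"{chunk:.0f}-second chunks: at least {delay:.0f} seconds behind live")
```

With three buffered chunks, moving from 6-second to 2-second chunks drops the floor from 18 seconds to 6, which is why tuning helps. The tradeoff is a smaller buffer to ride out network hiccups.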

Low-Latency Streaming Protocols

Luckily, emerging technologies for speedy delivery are gaining traction. Here’s a look at the fastest protocols available in 2021.

RTMP

RTMP delivers high-quality streams efficiently and is still used by the majority of broadcasters for speedy video contribution, but it has disappeared from the publishing end of most workflows.

Benefits:

- Low latency and well established.

- Supported by most encoders and media servers.

- Required by many social media platforms.

Limitations:

- RTMP has died on the playback side and thus is no longer an end-to-end technology.

- As a legacy protocol, RTMP ingest will likely be replaced by more modern, open-source alternatives like SRT.

SRT

SRT is popular for use cases involving unstable or unreliable networks. As a UDP-based protocol, SRT is great at delivering high-quality video over long distances, but it suffers from limited player support without a lot of customization. For that reason, it’s more commonly used for transporting content to the ingest point, where it’s transcoded into another protocol for playback.

Benefits:

- An open-source alternative to proprietary protocols.

- High-quality and low-latency.

- Designed for live video transmission across unpredictable public networks.

- Accounts for packet loss and jitter.

Limitations:

- Not natively supported by all encoders.

- Still being adopted as newer technology.

- Not widely supported for playback.

WebRTC

WebRTC is growing in popularity as an HTML5-based solution that’s well-suited for creating browser-based applications. The open-source technology allows for low-latency delivery in a browser-based, Flash-free environment; however, it wasn’t designed for one-to-many broadcasts.

By leveraging Wowza’s WebRTC streaming solutions, broadcasters are able to overcome these hurdles, ensuring sub-second delivery to viral audiences. And because WebRTC can be used end to end, it’s gaining traction as both an ingest and delivery format.

Benefits:

- Easy, browser-based contribution.

- Low latency, supporting interactivity with delivery in about 500 milliseconds.

- Can be used end-to-end for some use cases.

Limitations:

- Not the best option for broadcast-quality video contribution, due to certain features that enable near real-time delivery.

- Difficult to scale without a streaming platform like Wowza.

Low-Latency HLS

Low-Latency HLS is the next big thing when it comes to low-latency video delivery. The spec promises sub-two-second latencies at scale while also offering backward compatibility with existing clients. Large-scale deployments of this HLS extension require integration with CDNs and players, and vendors across the streaming ecosystem are working to add support.

Benefits:

- All the benefits of HLS — with lightning-fast delivery to boot.

- Ideal for interactive live streaming at scale.

Limitations:

- As an emerging spec, vendors are still implementing support.

Low-Latency CMAF for DASH

Similar to Low-Latency HLS, Low-Latency CMAF for DASH is an alternative to traditional HTTP-based video delivery supported by Akamai. While still in its infancy, the technology shows promise of delivering super-fast video at scale.

Benefits:

- All the benefits of MPEG-DASH plus low-latency delivery.

Limitations:

- Doesn’t play back on as many devices as HLS because Apple doesn’t support it.

- Still an emerging technology that’s yet to be championed by many vendors.

Conclusion

Whatever low-latency protocol you choose to employ, you’ll want streaming technology that provides you with fine-grained control over latency, video quality, and scalability while offering the greatest flexibility. That’s where Wowza comes in.

With a wide variety of features, projects, and protocol compatibilities, there’s a Wowza low-latency solution for every use case. Whether you’re broadcasting with Wowza Streaming Engine or Wowza Streaming Cloud, we have the technology to get your streams from camera to screen with unmatched speed, reliability, quality, and resiliency.